This post is the second of two devoted to an idea of David Wallace’s: applying Google’s PageRank algorithm to the APDA placement data.

- Part 1

- Part 2

- Source on GitHub

Last time we looked at the motivation and theory behind the idea. Now we’ll try predicting PageRanks. Can students who care about PageRank use the latest PGR to guesstimate a program’s PageRank 5 or 10 years in the future? Can they use the latest placement data?

The Data

We’ll use a somewhat different data set from last time, since we need things broken down by year. Our data now comes from the APDA homepage, where you can search by PhD program and get a list of the jobs its graduates have landed.

Unfortunately the search results are in a pretty unfriendly format. This means doing some nasty scraping, a notoriously error-prone process. And even before scraping, the data seems to have some errors and quirks (duplicate entries, inconsistent capitalization, missing values, etc.). I’ve patched what I can, but we should keep in mind that we’re already working with a pretty noisy signal even before we get to any analysis.
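The post doesn't list exactly which patches were applied. As a rough illustration of the kind of cleanup described, here's a minimal sketch that assumes each scraped row is a dict with hypothetical fields `graduate`, `program`, `placement`, `year`, and `type` (not the APDA's actual schema). Duplicates are keyed on the graduate as well as the job, so two different people hired by the same department in the same year aren't merged.

```python
# Hypothetical field names; the real scraped data may be structured differently.
FIELDS = ("graduate", "program", "placement", "year", "type")


def normalize(text):
    """Collapse runs of whitespace and case-fold, so name variants compare equal."""
    return " ".join(str(text).split()).casefold()


def clean(records):
    """Drop rows with missing fields, normalize names, and remove duplicates."""
    seen, cleaned = set(), []
    for r in records:
        if any(r.get(f) in (None, "") for f in FIELDS):
            continue  # missing value: skip the row rather than guess
        row = {f: normalize(r[f]) for f in FIELDS if f != "year"}
        row["year"] = int(r["year"])
        key = tuple(row[f] for f in FIELDS)
        if key in seen:
            continue  # same graduate, program, placement, year, and type
        seen.add(key)
        cleaned.append(row)
    return cleaned


rows = [
    {"graduate": "A. Smith", "program": "Princeton University",
     "placement": "Rutgers University", "year": "2015", "type": "Tenure-Track"},
    {"graduate": "A. Smith", "program": "princeton  university",
     "placement": "Rutgers University", "year": 2015, "type": "tenure-track"},
    {"graduate": "B. Jones", "program": "Stanford University",
     "placement": None, "year": 2016, "type": "Postdoc"},
]
print(len(clean(rows)))  # the first two rows collapse; the third is dropped
```

Case-folding gives reliable matching keys but not display names, so a real pipeline would probably keep a canonical spelling alongside each key.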

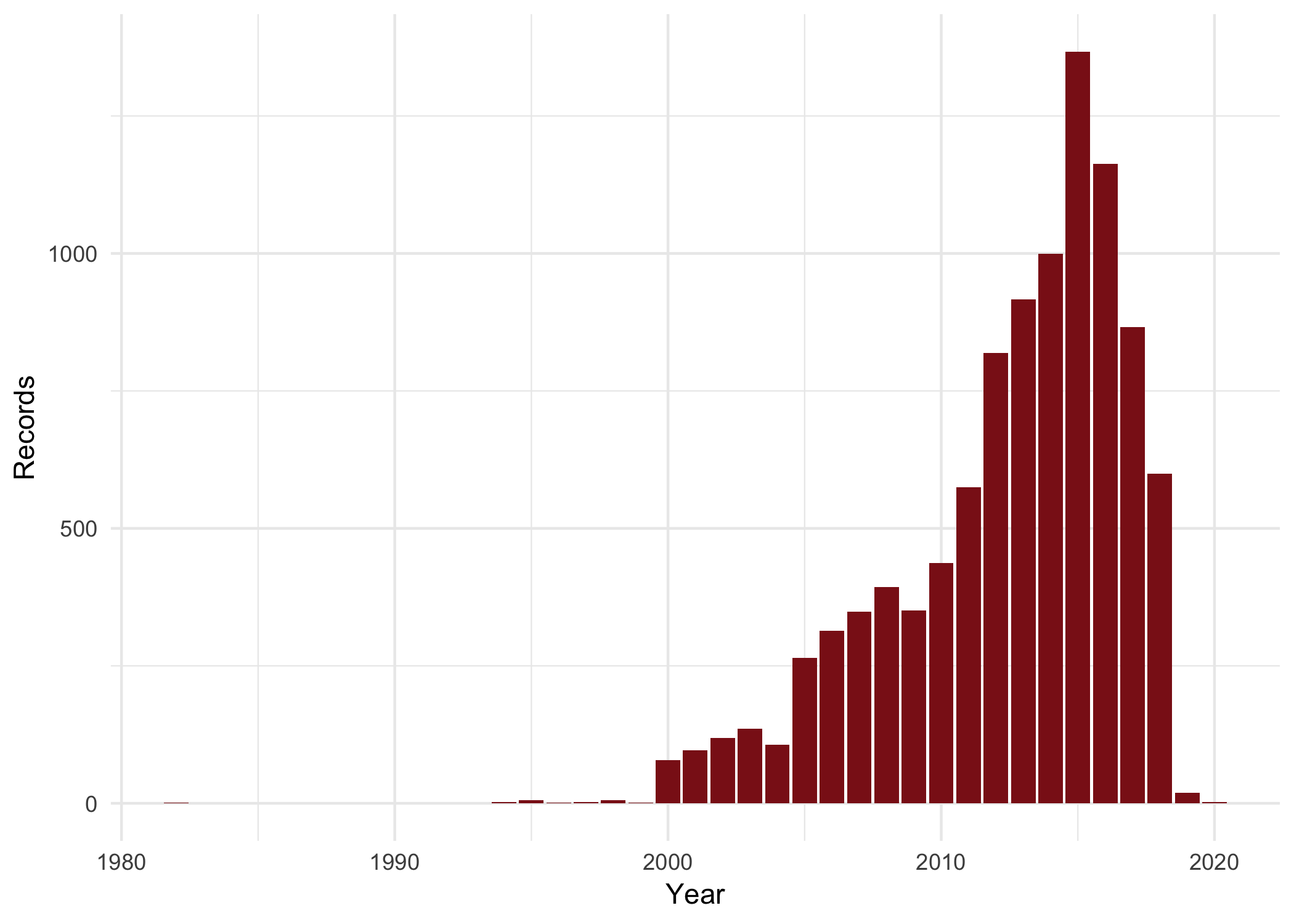

A quick poke around before we get to the main event: how much data do we have for each year? The years since 2010 really dominate this data set.

That’s going to be a problem when we try to predict future PageRank from past PageRank. Ideally we’d have two data-rich periods separated by a 5-year span. Then we could see how well a hypothetical prospective student would have done at predicting the PageRanks they’d face on the job market, using the PageRanks available when they were choosing a program. But we don’t have that, so we’ll have to live with some additional noise when we get to that analysis below.
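Tallying records per year is enough to see where the data is thin. Here's a minimal sketch, assuming each record is a dict with a hypothetical `year` field; the `minimum` cutoff for calling a year "data-rich" is an arbitrary illustration, since the post doesn't define one.

```python
from collections import Counter


def placements_per_year(records):
    """Number of placement records per year, in chronological order."""
    counts = Counter(r["year"] for r in records)
    return dict(sorted(counts.items()))


def years_with_at_least(records, minimum):
    """Years whose record count meets a (hypothetical) minimum."""
    return [y for y, n in placements_per_year(records).items() if n >= minimum]


records = [{"year": 2012}, {"year": 2008}, {"year": 2012}, {"year": 2015}]
print(placements_per_year(records))
print(years_with_at_least(records, 2))
```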

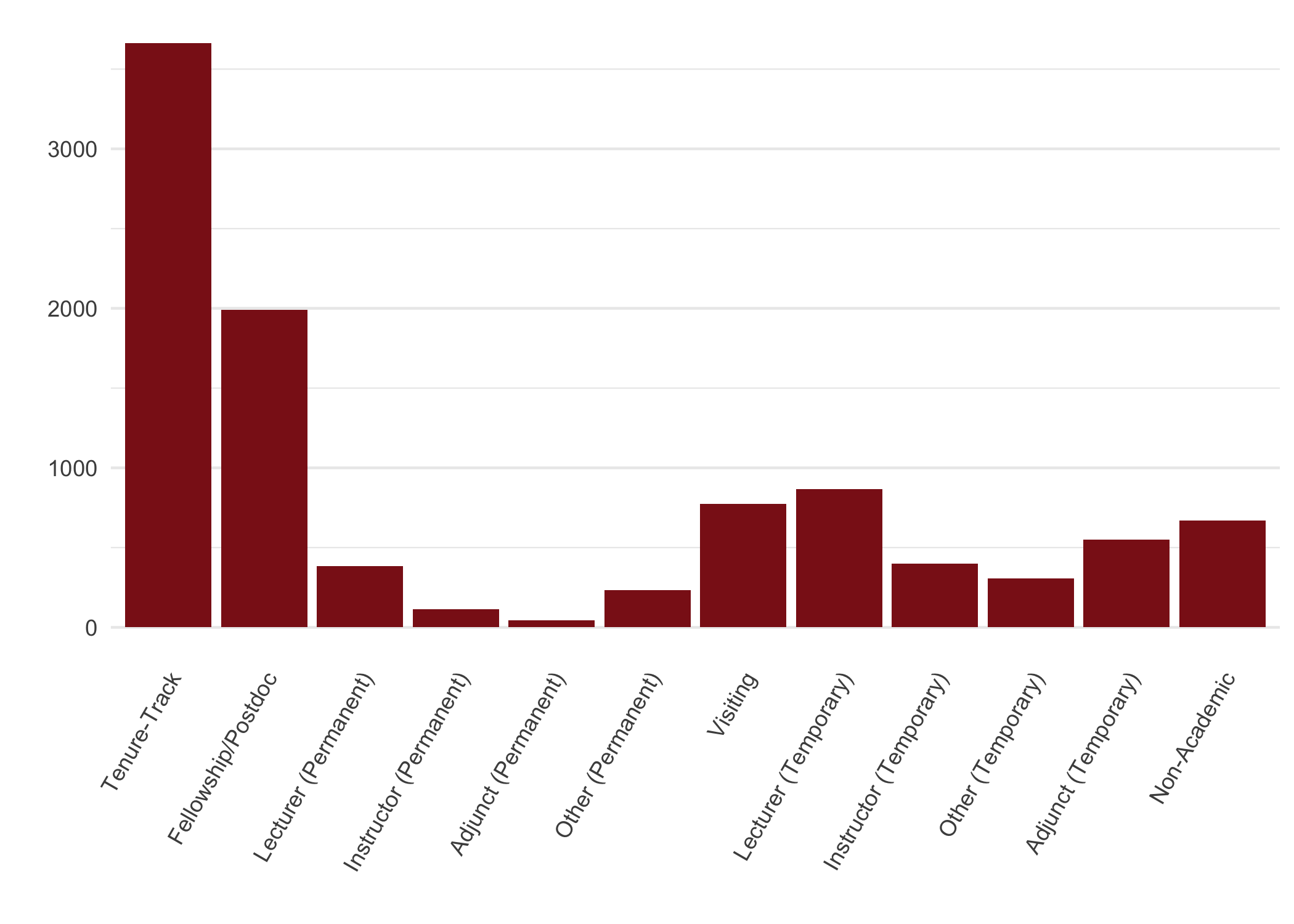

For now let’s turn to placement type. The APDA tracks whether a job is tenure-track (TT), a postdoc, a temporary lectureship, etc. TT and postdoc placements dominate the landscape, followed by temporary and non-academic positions.

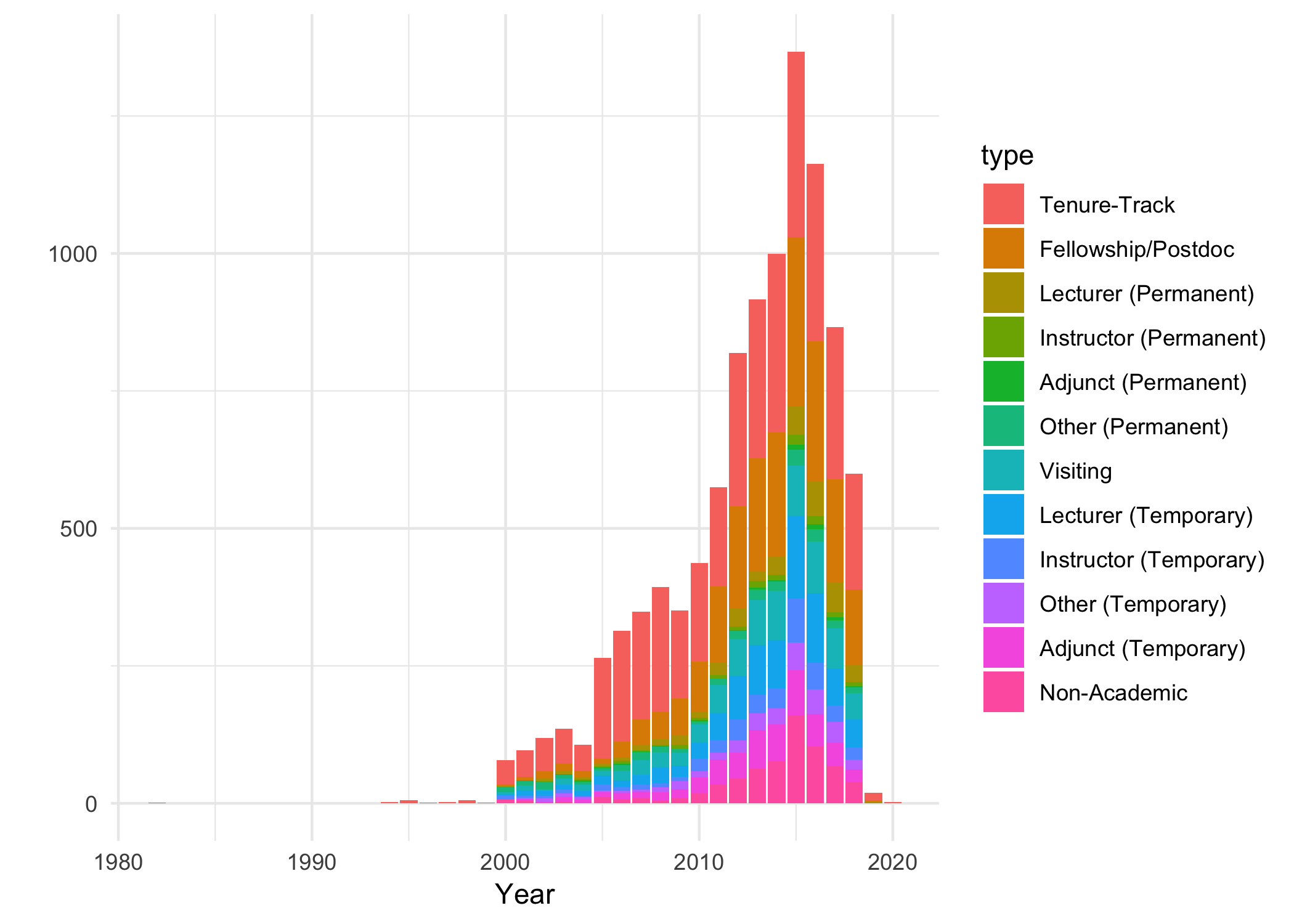

Placement types change dramatically over the years, though. From the early to the late naughties, TT goes from being the dominant type to a minority.

Is this the story of the Great Recession? That’s likely a big factor. But our data gets spottier as we go back through the naughties. So a selection effect is another likely culprit.
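Because the number of records varies so much by year, raw counts of each type mostly track coverage. Normalizing within each year shows shifts in the mix instead. Here's a minimal sketch, assuming records with hypothetical `year` and `type` fields. Shares correct for volume but not for the selection effect: if older records over-represent certain kinds of jobs, the shares inherit that bias.

```python
from collections import Counter, defaultdict


def type_shares_by_year(records):
    """Map each year to the fraction of that year's placements of each type."""
    by_year = defaultdict(Counter)
    for r in records:
        by_year[r["year"]][r["type"]] += 1
    shares = {}
    for year in sorted(by_year):
        total = sum(by_year[year].values())
        shares[year] = {t: n / total for t, n in by_year[year].items()}
    return shares


records = (
    [{"year": 2005, "type": "tt"}] * 2
    + [{"year": 2005, "type": "postdoc"}]
    + [{"year": 2009, "type": t} for t in ("tt", "postdoc", "postdoc", "temporary")]
)
print(type_shares_by_year(records))
```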

Now let’s turn to predicting PageRanks.

Predicting PageRank from PGR

We’ll calculate all PageRanks on a five-year window (a somewhat arbitrary choice). So the “future” PageRanks—the numbers we’ll be trying to predict—are calculated from the placement data in the years 2014–2018.

For now we’ll count postdocs as well as TT placements, for continuity with the last post and with Wallace’s original analysis. We’ll rerun the analysis using only TT jobs at the end.
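The post doesn't spell out how the graph is built, so here's a minimal sketch under stated assumptions: each placement is a directed edge from the hiring department to the graduate's PhD program (the hire acts as an endorsement), edges are weighted by placement count, the damping factor is the conventional 0.85, and rank held by nodes with no outgoing edges is spread evenly over all nodes. The record fields and the type labels `"tt"` and `"postdoc"` are hypothetical. This isn't necessarily the code in the GitHub repository; `networkx.pagerank` would be an off-the-shelf alternative.

```python
from collections import defaultdict


def pagerank(edges, damping=0.85, tol=1e-10, max_iter=1000):
    """Power-iteration PageRank on a weighted directed graph.

    edges maps (source, target) -> weight. Returns {node: score} summing to 1.
    """
    nodes = sorted({node for pair in edges for node in pair})
    n = len(nodes)
    out_weight = defaultdict(float)
    for (src, _), w in edges.items():
        out_weight[src] += w
    rank = {v: 1.0 / n for v in nodes}
    for _ in range(max_iter):
        # Rank held by nodes with no outgoing edges is spread evenly.
        dangling = sum(rank[v] for v in nodes if out_weight[v] == 0)
        new = {v: (1 - damping) / n + damping * dangling / n for v in nodes}
        for (src, dst), w in edges.items():
            new[dst] += damping * rank[src] * w / out_weight[src]
        converged = sum(abs(new[v] - rank[v]) for v in nodes) < tol
        rank = new
        if converged:
            break
    return rank


def window_pagerank(records, start, end, types=("tt", "postdoc")):
    """PageRank from placements with start <= year <= end and an allowed type."""
    edges = defaultdict(float)
    for r in records:
        if start <= r["year"] <= end and r["type"] in types:
            # Assumed convention: the hiring department "links to" the
            # program that trained the hire.
            edges[(r["placement"], r["program"])] += 1.0
    return pagerank(edges)


records = [
    {"program": "p", "placement": "q", "year": 2015, "type": "tt"},
    {"program": "p", "placement": "r", "year": 2016, "type": "postdoc"},
    {"program": "q", "placement": "r", "year": 2014, "type": "tt"},
    {"program": "q", "placement": "p", "year": 2010, "type": "tt"},         # outside window
    {"program": "r", "placement": "p", "year": 2015, "type": "temporary"},  # excluded type
]
ranks = window_pagerank(records, 2014, 2018)
tt_only = window_pagerank(records, 2014, 2018, types=("tt",))
```

The same function covers the TT-only rerun by passing `types=("tt",)`.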

Given these choices, here are the top 10 programs by PageRank for 2014–18.

| Program | PageRank (2014–18) |
|---|---|
| Princeton University | 0.0437282 |
| Stanford University | 0.0434163 |
| New York University | 0.0408766 |
| University of Oxford | 0.0374294 |
| University of Arizona | 0.0367260 |
| Harvard University | 0.0323110 |
| University of Pittsburgh (HPS) | 0.0296616 |
| Massachusetts Institute of Technology | 0.0278274 |
| University of Chicago | 0.0277885 |
| University of Toronto | 0.0260106 |

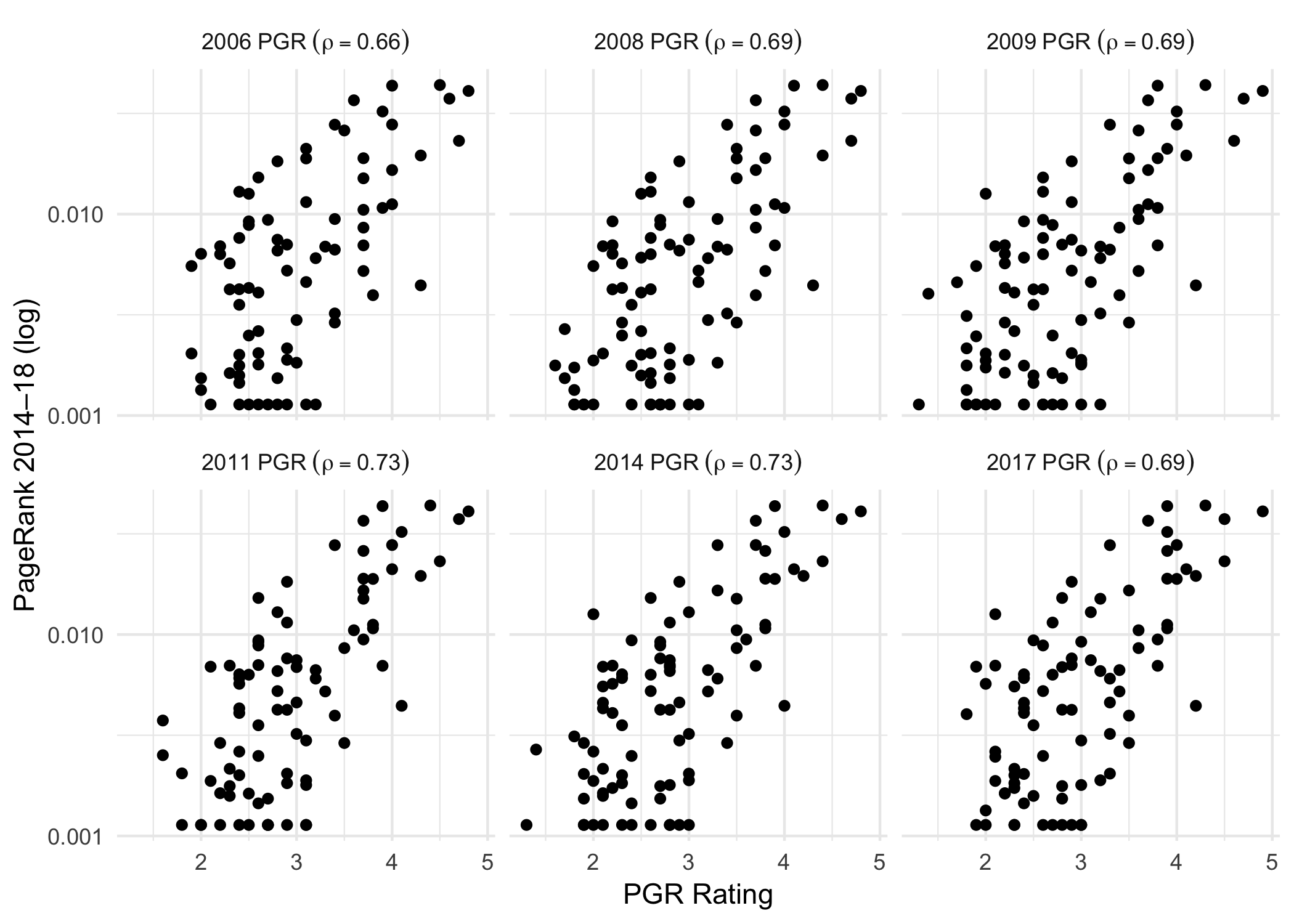

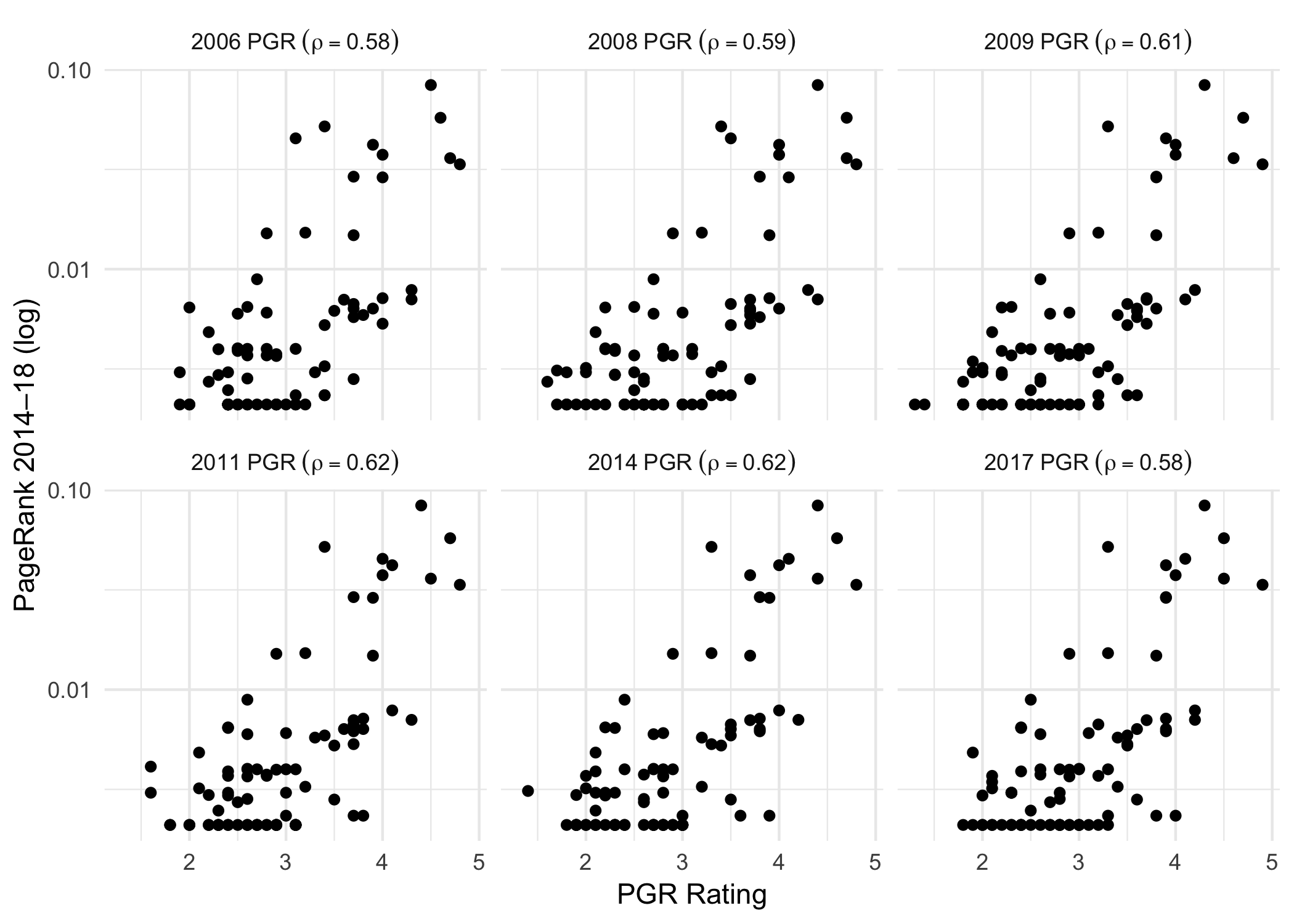

How well do PGR scores predict these rankings? Following Wallace, we’ll start with the 2006 PGR, but we’ll also consider the five editions since. I’ve put the PageRanks (the y-axis) on a log scale for visibility.

Overall the correlation looks pretty strong, ranging from 0.66 in 2006 to 0.73 in 2014. And that seems consistent with Wallace’s original finding, a correlation of 0.75 between the 2006 PGR and all placement data up through 2016.

Notice, though, that the connection gets much weaker when PGR ratings are lower. Below a PGR rating of about 3, PageRank doesn’t seem to increase much with PGR rating: the average correlation there is only 0.25.

We have two conclusions so far then. First, as Wallace found, PGR rating seems to predict PageRank pretty well, even when the ratings are collected almost a decade in advance. But second, this effect is much stronger for programs with high PGR ratings.

Predicting PageRank from PageRank

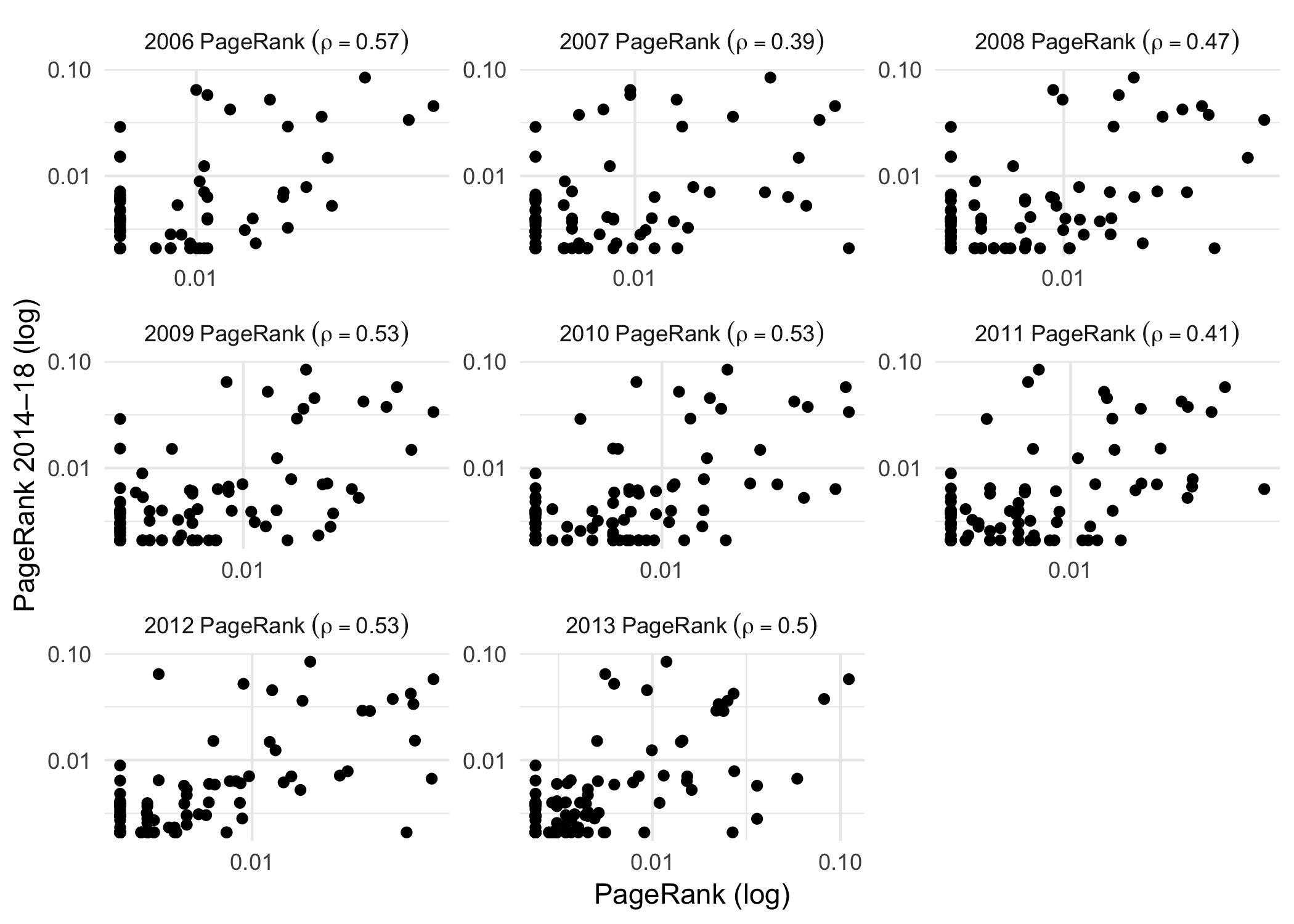

What if our hypothetical grad student had relied on the available placement data instead of PGR scores in deciding where to go?

Well, they’d probably have been better off using the 2006 PGR. But PageRank becomes pretty competitive with PGR starting around 2010, when the placement data gets richer.

It might be that PageRank has the potential to be a better predictor—even long range—provided we have enough placement data. Or it might be that PageRank is only better at close range. We can’t be sure, but hopefully we’ll find out in a few years as the APDA database grows.

Tenure-track Only

Finally, let’s do the same analysis but count only tenure-track placements toward a department’s PageRank. Here are the top 10 programs on that basis (note that this group more closely resembles the one we saw last time).

| Program | PageRank (2014–18) |
|---|---|
| Princeton University | 0.0837437 |
| University of Pittsburgh (HPS) | 0.0640854 |
| University of Oxford | 0.0573890 |
| University of Chicago | 0.0519388 |
| Yale University | 0.0452552 |
| Harvard University | 0.0420271 |
| Massachusetts Institute of Technology | 0.0374587 |
| Rutgers University | 0.0360075 |
| New York University | 0.0335406 |
| University of California, Berkeley | 0.0290834 |

Here are the comparisons with past PGR scores.

And here are past PageRanks.

Unsurprisingly, restricting ourselves to TT placements makes things noisier across the board. PGR-based predictions show more resilience here, possibly because they’re only affected by the added noise at one end.