We’ve all been there. One referee is positive, the other negative, and the editor decides to reject the submission.

I’ve heard it said that editors tend to be conservative relative to their referees’ recommendations. That jibes with my experience as an author.

So is there anything to it—is “editorial gravity” a real thing? And if it is, how strong is its pull? Is there some magic function editors use to compute their decision based on the referees’ recommendations?

In this post I’ll consider how things shake out at Ergo.

Decision Rules

Ergo doesn’t have any rule about what an editor’s decision should be given the referees’ recommendations. In fact, we explicitly discourage our editors from relying on any such heuristic. Instead we encourage them to rely on their judgment about the submission’s merits, informed by the substance of the referees’ reports.

Still, maybe there’s some natural law of journal editing waiting to be discovered here, or some unwritten rule.

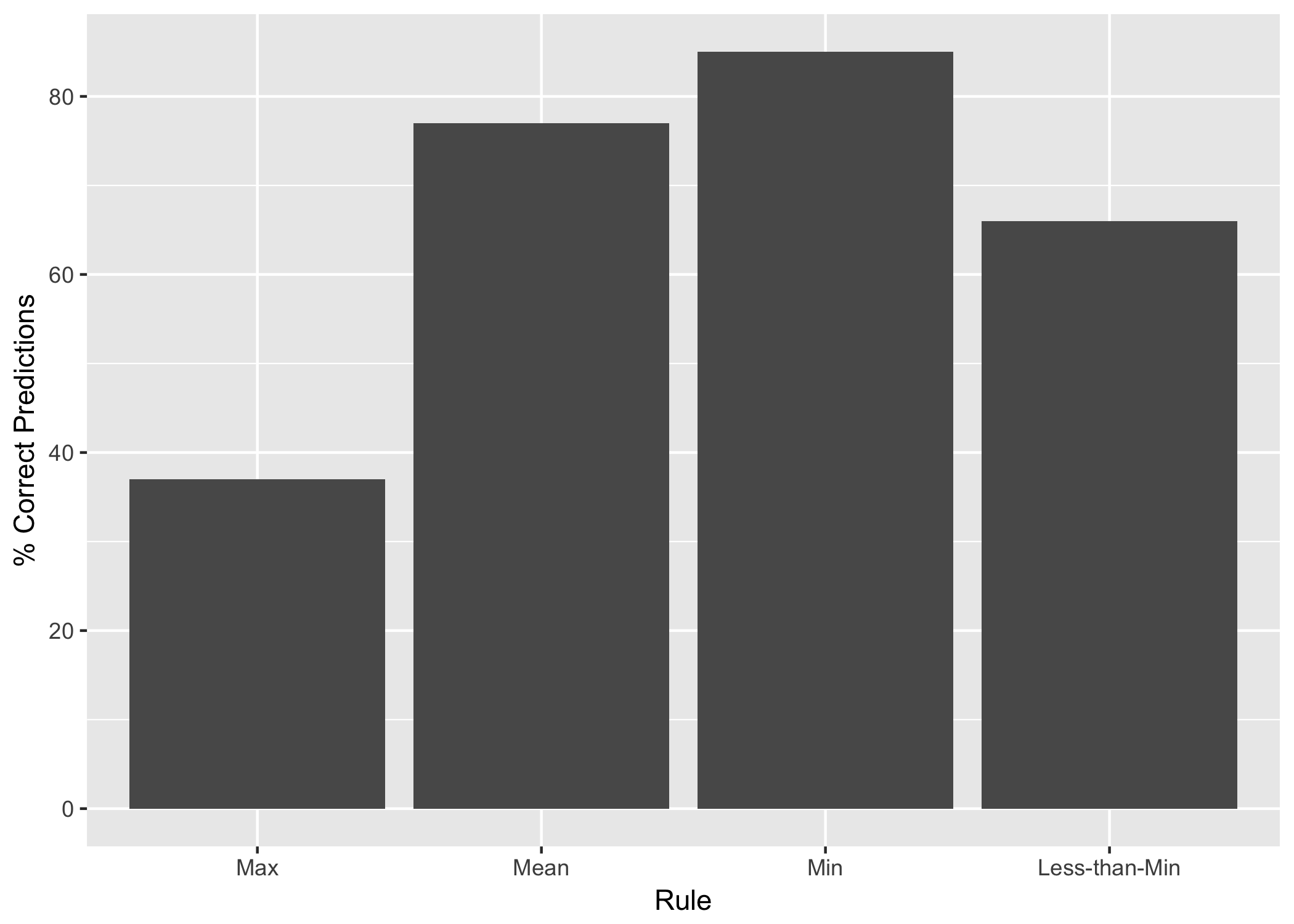

Referees choose from four possible recommendations at Ergo: Reject, Major Revisions, Minor Revisions, or Accept. Let’s consider four simple rules we might use to predict an editor’s decision, given the recommendations of their referees.

- Max: the editor follows the recommendation of the most positive referee. (Ha!)

- Mean: the editor “splits the difference” between the referees’ recommendations.

  - Accept + Major Revisions → Minor Revisions, for example.

  - When the midpoint falls between two possible decisions, we’ll stipulate that this rule “rounds down”.

    - Major Revisions + Minor Revisions → Major Revisions, for example.

- Min: the editor follows the recommendation of the most negative referee.

- Less-than-Min: the editor’s decision is one step more negative than either referee’s recommendation.

  - Major Revisions + Minor Revisions → Reject, for example.

  - Except, obviously, Reject + anything → Reject.
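To make the four rules concrete, here is a minimal Python sketch. It assumes the recommendations are coded on an ordinal scale from 0 (Reject) to 3 (Accept); that coding and the function names are hypothetical illustrations, not the code behind this post. Integer floor division gives the Mean rule its “round down” behaviour.

```python
# Hypothetical ordinal coding of Ergo's four recommendations.
REJECT, MAJOR, MINOR, ACCEPT = 0, 1, 2, 3
LABELS = {REJECT: "Reject", MAJOR: "Major Revisions",
          MINOR: "Minor Revisions", ACCEPT: "Accept"}

def max_rule(recs):
    """Follow the most positive referee."""
    return max(recs)

def mean_rule(recs):
    """Split the difference; floor division rounds down between decisions."""
    return sum(recs) // len(recs)

def min_rule(recs):
    """Follow the most negative referee."""
    return min(recs)

def less_than_min_rule(recs):
    """One step below the most negative referee, but never below Reject."""
    return max(min(recs) - 1, REJECT)

RULES = {"Max": max_rule, "Mean": mean_rule,
         "Min": min_rule, "Less-than-Min": less_than_min_rule}

if __name__ == "__main__":
    # The worked examples from the list of rules.
    for recs in [(ACCEPT, MAJOR), (MAJOR, MINOR), (REJECT, ACCEPT)]:
        preds = {name: LABELS[rule(recs)] for name, rule in RULES.items()}
        print(" + ".join(LABELS[r] for r in recs), "->", preds)
```

Each rule takes a tuple of recommendations, so the same functions also work for submissions with more than two referees.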

Do any of these rules do a decent job of predicting editorial decisions? If so, which does best?

A Test

Let’s run the simplest test possible. We’ll go through the externally reviewed submissions in Ergo’s database and see how often each rule makes the correct prediction.
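The test amounts to counting matches. Here is a minimal Python sketch, assuming each submission is stored as a pair of (referee recommendations, editor’s decision) on a hypothetical 0 (Reject) to 3 (Accept) scale. The toy records are invented for illustration and are not Ergo’s data. Python’s built-in `min` and `max` stand in for the Min and Max rules; any function mapping a tuple of recommendations to a decision can be scored the same way.

```python
from typing import Callable, Sequence

REJECT, MAJOR, MINOR, ACCEPT = 0, 1, 2, 3  # hypothetical ordinal coding

# (referee recommendations, editor's decision)
Record = tuple[tuple[int, ...], int]

def accuracy(rule: Callable[[Sequence[int]], int],
             records: Sequence[Record]) -> float:
    """Fraction of records where the rule's prediction equals the decision."""
    if not records:
        raise ValueError("need at least one record")
    hits = sum(rule(recs) == decision for recs, decision in records)
    return hits / len(records)

# Invented toy records for illustration only, NOT Ergo's data.
toy_records: list[Record] = [
    ((ACCEPT, MAJOR), MAJOR),
    ((MINOR, MINOR), MINOR),
    ((REJECT, ACCEPT), REJECT),
    ((MAJOR, MINOR), MINOR),
]

if __name__ == "__main__":
    for name, rule in {"Max": max, "Min": min}.items():
        print(name, accuracy(rule, toy_records))
```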

Not only was Min the most accurate rule, its predictions were correct 85% of the time! (The sample size here is 233 submissions, by the way.) Apparently, editorial gravity is a real thing, at least at Ergo.

Of course, Ergo might be atypical here. It’s a new journal, published online only, with no regular publication schedule. So there’s some pressure to play it safe, and no incentive to accept papers in order to fill space.

But let’s suppose for a moment that Ergo is typical as far as editorial gravity goes. That raises some questions. Here are two.

Two Questions

First question: can we improve on the Min rule? Is there a not-too-complicated heuristic that’s even more accurate?

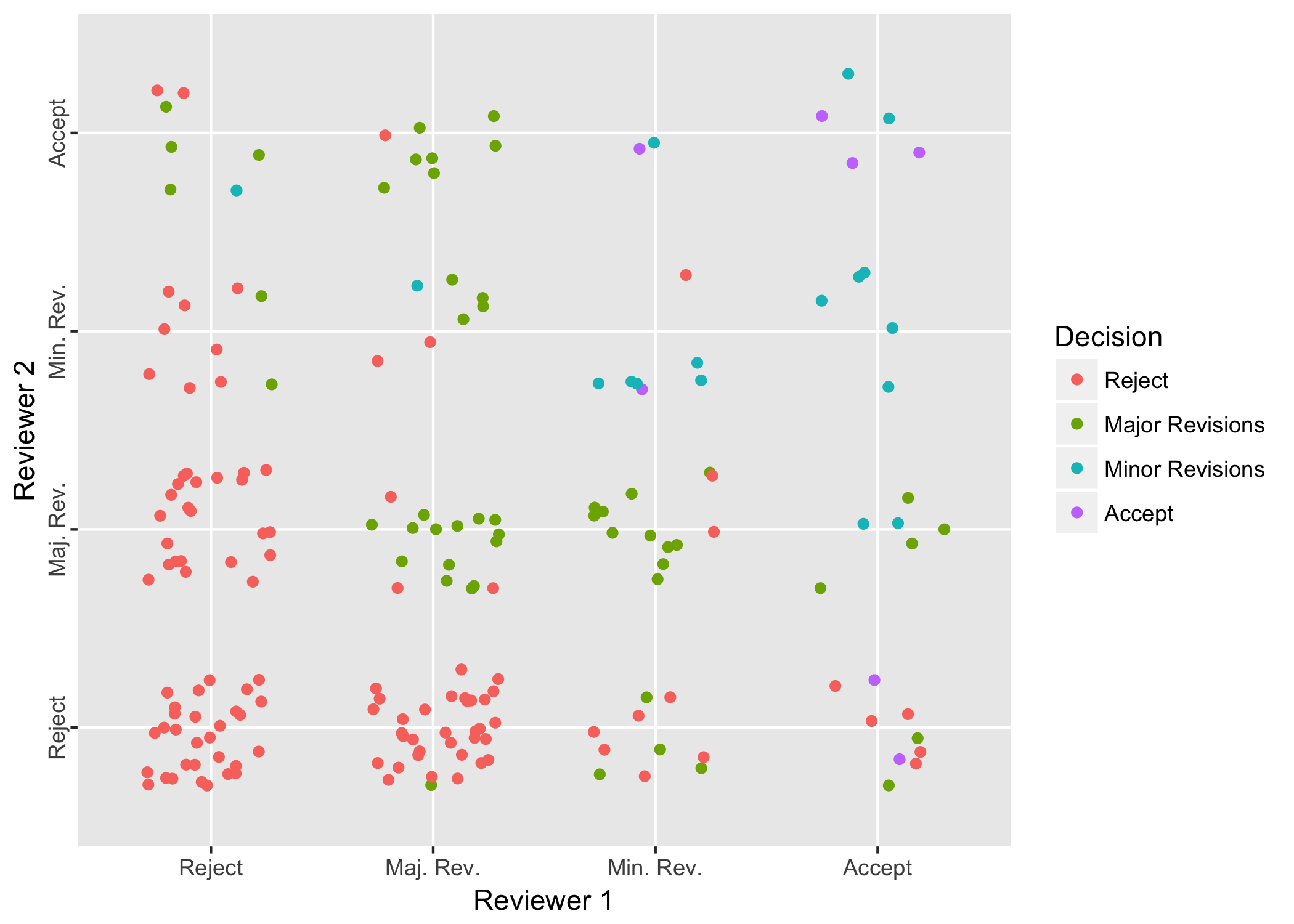

Visualizing our data might help us spot any patterns. Typically there are two referees, so we can plot most submissions on a plane according to the referees’ recommendations. Then we can colour them according to the editor’s decision. Adding a little random jitter to make all the points visible:

To my eye this looks a lot like the pattern of concentric corners you’d expect from the Min rule. The fit isn’t exact, though, especially where the two referees strongly disagree: the top-left and bottom-right corners of the plot. Still, other than treating cases of strong disagreement as a tossup, no simple way of improving on the Min rule jumps out at me.

Second question: if editorial gravity is a thing, is it a good thing or a bad thing?

I’ll leave that as an exercise for the reader.

Technical Note

This post was written in R Markdown, and the source code is available on GitHub.